A key goal we are pursuing at AI2 is a teachable reasoning system for question-answering (QA): one that a user can interact with to see how the system arrived at its answers, debug errors, and correct them so that the system gradually improves over time. Achieving this requires three things. First, the system needs to be able to explain its reasoning in a faithful, comprehensible way, including articulating the internal “beliefs” that led to an answer. Second, if the system makes a mistake, users need to be able to identify which of the system's beliefs were in error and correct them. Finally, user corrections need to persist in memory, so that the system can continually improve over time.

In this talk, I will describe our work in these exciting directions and how we train language models to reason systematically in natural language, and I will outline what works, what does not, and where new effort is needed. Finally, I will speculate on future opportunities for building lifelong learning agents using these technologies, a step towards a grand goal of AI.

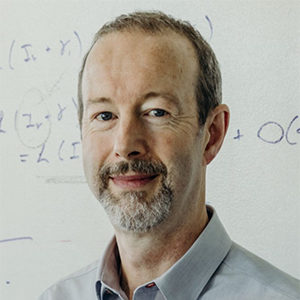

Peter Clark is a Senior Research Director and the interim CEO at the Allen Institute for AI (AI2), and he leads the Aristo Project. His work focuses on natural language processing, machine reasoning, world knowledge, and the interplay between these three areas.