Data doesn’t speak for itself, especially when it comes to complex, 3D data. Trained professionals translate it into visuals we can understand, whether that’s a CT scan of a broken leg, a satellite image of a storm, or a map of the galaxy.

Chaoli Wang, professor of computer science and engineering at the University of Notre Dame, and his lab have devised a new software system that puts users in the driver’s seat. By simply typing or speaking instructions, users can interact with data in sophisticated and creative ways, effectively democratizing 3D visualization.

The team’s paper has been selected for the Best Paper Award at the IEEE VIS 2025 Conference and will appear in IEEE Transactions on Visualization and Computer Graphics in January 2026.

“By harnessing the power of large language models—like ChatGPT—and AI agents for visualization, our software makes it possible for users to query a 3D dataset, see visualization results, and get answers to their questions right on the spot,” said Wang.

Wang said that his team deals with 3D volumetric datasets—collections of data that represent information in three dimensions, where each element (called a voxel, short for volume pixel) stores a value at a specific position in 3D space.

Each voxel, said Wang, may encode properties such as intensity, color, density, or material type, and this volumetric data is common in medical imaging and scientific simulations such as fluid dynamics, astrophysics, and climate models.
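To make the voxel idea concrete, here is a minimal sketch of a dense scalar volume. It is not the team's software: the class name `VoxelGrid`, the "x varies fastest" memory layout, and the single number per voxel are illustrative assumptions. Real datasets often store several properties per voxel.

```python
from dataclasses import dataclass, field


@dataclass
class VoxelGrid:
    """Hypothetical dense 3D grid: one scalar (e.g. density) per voxel."""
    nx: int
    ny: int
    nz: int
    values: list = field(default_factory=list)

    def __post_init__(self):
        # Allocate a zero-filled volume if no values were supplied.
        if not self.values:
            self.values = [0.0] * (self.nx * self.ny * self.nz)
        if len(self.values) != self.nx * self.ny * self.nz:
            raise ValueError("values must contain nx * ny * nz entries")

    def index(self, x, y, z):
        # Map a 3D position to a flat offset: x varies fastest, then y, then z.
        if not (0 <= x < self.nx and 0 <= y < self.ny and 0 <= z < self.nz):
            raise IndexError(f"voxel ({x}, {y}, {z}) is outside the grid")
        return x + self.nx * (y + self.ny * z)

    def get(self, x, y, z):
        return self.values[self.index(x, y, z)]

    def set(self, x, y, z, value):
        self.values[self.index(x, y, z)] = value


# A tiny 4 x 3 x 2 volume with one nonzero voxel.
grid = VoxelGrid(4, 3, 2)
grid.set(1, 2, 1, 7.5)
print(grid.get(1, 2, 1))
```

Each voxel is addressed by its (x, y, z) position, but the values live in one flat list, so a volume with `nx * ny * nz` voxels needs that many stored numbers.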

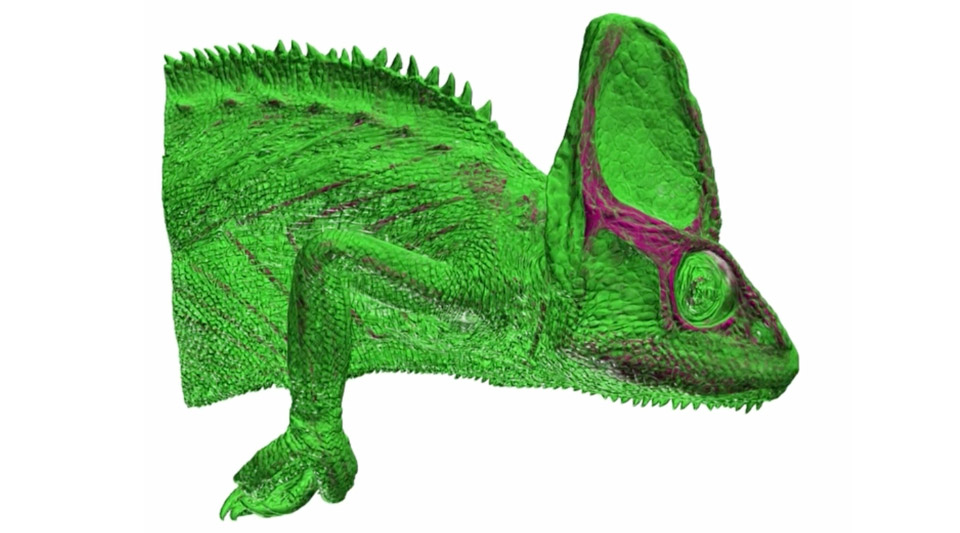

In the research described in the team’s paper, users explore complex datasets ranging from CT scans of a fish and a chameleon to numerical simulations of a hurricane and the Earth’s mantle. Using a natural language interface, they can adjust color, opacity, and lighting, and change viewing angles to highlight specific structures and refine views. The system responds to a wide range of queries, even playful ones such as: “Transform the entire fish into a cyborg.”

In the example videos above, users can easily navigate complex biological datasets of a carp (left) and chameleon (right) with Wang’s AI-assisted system.

The system accomplishes this by combining multiple AI agents with a recent rendering technique called 3D Gaussian splatting, which represents a scene as millions of tiny, fuzzy dots and blends them into realistic 3D views quickly and smoothly.
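The blending step at the heart of Gaussian splatting can be sketched as follows. This is a simplified illustration of the general technique, not the team's renderer. It assumes the Gaussians have already been projected onto the screen, treats each one as round (isotropic) with a single grayscale color, and shades one pixel by compositing the splats from nearest to farthest. The names `Splat` and `shade_pixel` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    """A Gaussian already projected to screen space (simplified: isotropic)."""
    cx: float       # screen-space center x, in pixels
    cy: float       # screen-space center y, in pixels
    depth: float    # distance from the camera; smaller means closer
    sigma: float    # standard deviation of the fuzzy footprint, in pixels
    opacity: float  # peak opacity at the center, in [0, 1]
    color: float    # grayscale intensity, in [0, 1]


def shade_pixel(px, py, splats, background=0.0):
    """Alpha-composite splats front to back at pixel (px, py)."""
    color = 0.0
    transmittance = 1.0  # fraction of light still passing through
    for s in sorted(splats, key=lambda s: s.depth):
        d2 = (px - s.cx) ** 2 + (py - s.cy) ** 2
        # Opacity falls off as a Gaussian with distance from the splat center.
        alpha = s.opacity * math.exp(-0.5 * d2 / s.sigma ** 2)
        color += transmittance * alpha * s.color
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # stop early once the pixel is nearly opaque
            break
    return color + transmittance * background


# A half-transparent white splat over a black background.
print(shade_pixel(0, 0, [Splat(0, 0, 1.0, 2.0, 0.5, 1.0)]))
```

Because each splat only needs a depth sort and a cheap per-pixel weight, this kind of blending is fast, which is one reason the technique suits interactive exploration.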

Because the system trains on multiple 2D rendered images captured from different viewpoints, rather than on the usual memory-hungry 3D volumetric data, it is far less computationally intensive.
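A rough sense of why volumes are "memory-hungry" comes from how storage scales. The sketch below compares an n × n × n volume with a set of n × n images. The 4 bytes per voxel and per pixel and the 100 views are hypothetical choices for illustration, not figures from the team's paper, and the comparison covers raw input storage only, not training cost.

```python
def volume_bytes(n, bytes_per_voxel=4):
    """Storage for an n x n x n volume (assumed: 4 bytes, one float32, per voxel)."""
    return n ** 3 * bytes_per_voxel


def image_set_bytes(width, height, num_views, bytes_per_pixel=4):
    """Storage for num_views images (assumed: 4 bytes, one RGBA8 pixel, per pixel)."""
    return width * height * num_views * bytes_per_pixel


MIB = 2 ** 20
for n in (256, 512, 1024):
    vol = volume_bytes(n) / MIB
    imgs = image_set_bytes(n, n, num_views=100) / MIB
    print(f"{n}^3 volume: {vol:,.0f} MiB | 100 views at {n}x{n}: {imgs:,.0f} MiB")
```

Doubling the resolution multiplies the volume's size by 8 but each image's size by only 4, so under these assumptions a 512^3 volume takes 512 MiB while 100 views at 512 × 512 take 100 MiB.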

Wang, together with his Ph.D. students Kuangshi Ai and Kaiyuan Tang, will receive the Best Paper Award at the IEEE VIS 2025 Conference in Vienna, Austria, in November. Five papers will be honored as Best Papers from among 537 submissions.

Wang’s AI+VIS research work is supported by the National Science Foundation and the Department of Energy.

— Karla Cruise, Notre Dame Engineering