Visitors to the Smithsonian National Museum of Natural History in Washington, D.C., were recently invited to step into the shoes of synthetic personas—fictional yet data-driven characters created using generative artificial intelligence (AI)—to explore the real-world consequences of digital privacy decisions in the era of AI.

The one-day interactive exhibition, “Privacy, Security, and Safety in Digital Space,” took place as part of the Smithsonian’s Expert Is In program, which offers visitors to the museum the opportunity to chat with leading scientists and experts from around the nation.

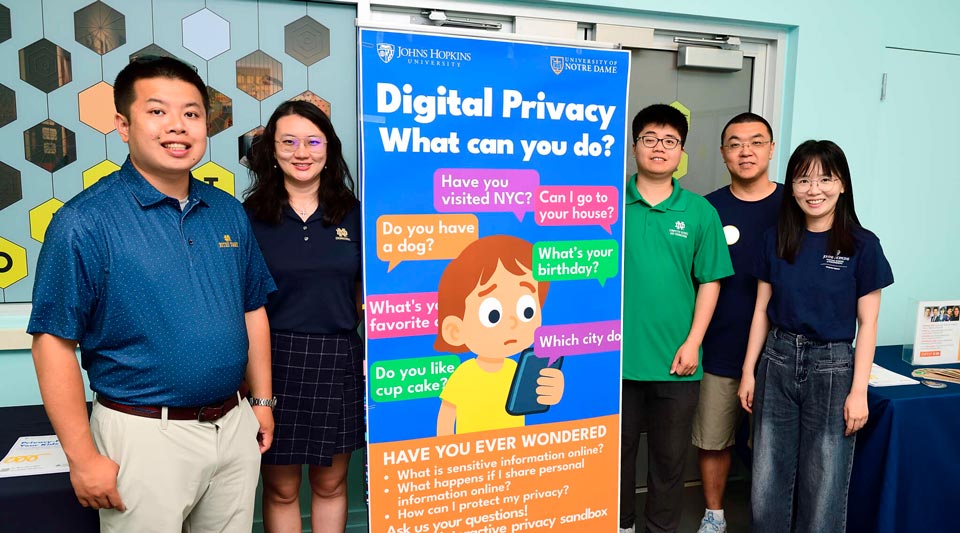

The exhibit, which featured an interactive tool designed for children ages 6 and up, was developed by researchers from the University of Notre Dame and Johns Hopkins University, as part of a multi-year National Science Foundation (NSF)–funded project aimed at transforming how users and developers understand and engage with privacy.

Photo Credit: Will Kirk/Johns Hopkins University

Through a user-friendly software interface, the researchers encouraged children and youth to think more critically about the personal data they share online—while also fostering open conversations within families about online safety, privacy, and responsible digital behavior. The team provided families with take-home resources to extend the learning beyond the museum and support continued dialogue at home.

“Privacy can often feel abstract and invisible, where users’ actions are influenced by their psychological and cognitive biases that create gaps between behaviors, attitudes, and knowledge,” said Toby Li, director of the Lucy Family Institute for Data & Society’s Human-Centered Responsible AI (HRAI) Lab and assistant professor of Computer Science and Engineering at the University of Notre Dame. “But when users step into the shoes of a relatable, human-like persona, the consequences start to feel real—and that changes how people think and act. We aim to develop this approach to support individuals in reflecting on their online behaviors and making informed decisions that align with their true preferences, interests, and values.”

Li serves as a principal investigator on the NSF-funded project, which focuses on developing metrics, guidelines, and conceptual frameworks for empathy-based approaches that foster privacy and security in cyberspace. The team seeks to better understand how empathy can be effectively invoked to influence user behavior. Li’s collaborators are Julia Qian, associate advising professor and director of advising strategy, assessment, and policy at the University of Notre Dame’s College of Engineering; Chaoran Chen, a Ph.D. student in computer science and engineering at Notre Dame; and co-lead Yaxing Yao, assistant professor of computer science and director of the Hopkins Privacy and Security Lab at Johns Hopkins University.

The exhibit’s software interface builds on foundational results from a privacy sandbox in which users navigated websites using a plug-in that supplied AI-generated identities, documenting their daily interactions and permission decisions. Researchers then analyzed the data to explore how different synthetic personas affected decision-making and system responses.

During the exhibit, more than 150 museum visitors explored the tool’s simulations, which used games, direct messages, and group chats to recreate situations in which users might be prompted to share personal information with strangers, friends, or even unknown data collectors.

Through public engagement opportunities, the team’s work is closing the gap in privacy education for communities that are often left behind: youth, seniors, lower-income families, and low-resource software developers. This initiative is at the heart of the HRAI Lab and aligns with the Lucy Family Institute for Data & Society’s strategic plans.

“This exhibit exemplifies the Lucy Family Institute’s mission to bridge cutting-edge research with real-world impact,” said Nitesh Chawla, founding director of the Lucy Family Institute for Data & Society and the Frank M. Freimann Professor of Computer Science and Engineering. “By translating complex ideas about digital privacy and AI into engaging, relatable experiences, we are not only educating the next generation but also creating a more responsible and inclusive digital future for all.”

As part of the NSF project’s broader impact goals, the researchers are advancing the University of Notre Dame’s commitment to creating meaningful interactions between scientific research and the public and to empowering informed decision-making.

“Our vision is to empower individuals of all ages and backgrounds with the knowledge and confidence to navigate the digital world responsibly,” said Qian. “Through STEM education outreach and workforce upskilling, we aim to foster a more informed and ethically grounded digital society. By bringing these initiatives into public spaces like the Smithsonian, we are not only expanding access but also advancing national awareness and readiness in computer and AI literacy.”

To learn more about the Lucy Family Institute for Data & Society’s HRAI Lab, please visit the website.

Originally posted at lucyinstitute.nd.edu by Christine Grashorn on August 19, 2025.