Computers constantly transfer information between processing and memory units, and this shuttling back and forth of data consumes a lot of energy.

Siddharth Joshi, assistant professor of computer science and engineering at the University of Notre Dame, and Ph.D. student Clemens Schafer are part of an international team of researchers who have designed and built a chip that runs computations directly in memory, eliminating the energy-intensive data movement common in current hardware designs.

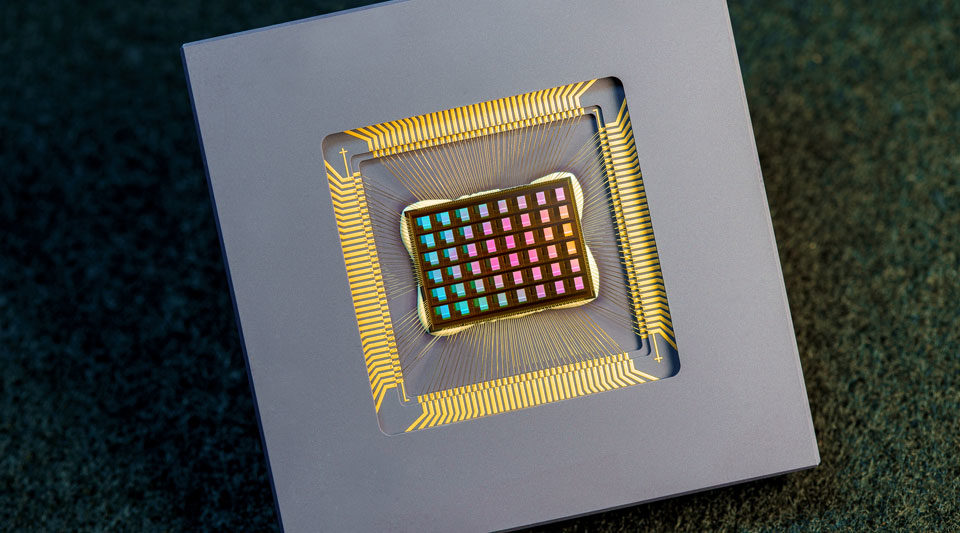

The team’s NeuRRAM chip is one of the first versatile, highly accurate compute-in-memory chips that can perform AI tasks, such as image classification, speech recognition, and image reconstruction, using a fraction of the energy consumed by other platforms. NeuRRAM is twice as energy efficient as state-of-the-art compute-in-memory chips.

The results of the research appear in the Aug. 17 issue of Nature. The research team, co-led by bioengineers at the University of California San Diego, also included researchers from Stanford University and Tsinghua University.

Making energy-efficient chips is increasingly important as power-hungry AI finds its way into more and more apps and devices, such as virtual assistants, smartwatches and VR headsets.

Many battery-operated devices lack the computing power needed to handle AI tasks, so they offload their data processing onto an already overburdened cloud.

The NeuRRAM chip is built for “edge AI,” which makes devices less dependent on the cloud. “Edge AI is the deployment of artificial intelligence applications in devices throughout the physical world,” said Joshi.

“Its name refers to the fact that AI computation is done near the user’s data at the edge of the network, rather than centrally in a cloud computing facility or private data center.”

Many smart devices send a continuous stream of audio and images to the cloud, and that journey from device to cloud makes data more vulnerable to interception and monitoring. By keeping data on or near the user’s device, the NeuRRAM chip can help maintain privacy.

Previous compute-in-memory chips were either efficient or versatile, but not both. “One of the key places where we overturned conventional wisdom is this efficiency-versatility trade-off,” said Joshi, director of the Intelligent Microsystems Lab.

“We showed that it’s possible to get efficient chips that don’t require this sacrifice of wide applicability. Our work is applicable to a range of machine learning and AI algorithms, not just one.”

Notre Dame computer scientists have long been looking for ways to use memory as a computational workspace, Joshi said. He credits Peter Kogge, the Ted H. McCourtney Professor of Computer Science and Engineering at Notre Dame, as a pioneer in this field who, as early as the 1990s, made significant contributions to designing chips that integrated memory and logic into processing-in-memory architectures.

While the neuromorphic NeuRRAM chip represents the very latest in compute-in-memory design, Joshi does not see it as the last word. “In the process of building this chip and making so many measurements, we developed new techniques for doing computation,” Joshi said. “Now, we have a better understanding of how to improve these chips even more.”

— Karla Cruise, College of Engineering